This post is a longer-than-normal summary of our experience using iBeacons in the Hidden Museum project – intended to document a few pointers for anyone considering iBeacons for their own indoor navigation system. Caution…. this does veer more toward the technical underbelly of the project, rather than the user-facing experience… which is covered in a separate post.

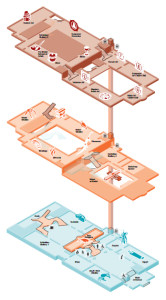

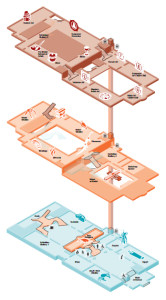

To give this all a bit more context, our basic setup is this: 1) A whole load of iBeacons placed around all three floors of the museum, 2) a device which uses their signals to calculate where it is in the museum, which 3) also uses its own compass to know which way it’s pointing. With these three tools our users can navigate a generated tour of the museum, getting lead from room to room, floor to floor, with an app that reacts when they reach each destination on the tour.

Spoiler alert… the system works!

The beginning

From the outset our fundamental technical goal was to accurately guide a single device on a physical journey around the museum, and have it react when it reaches multiple, flexible locations.

iBeacons?

iBeacons emit a signal that can be picked up by a mobile device, and the strength of that signal tells the device a rough distance between it and the iBeacon. With a few assumptions, this suggests that the technology allows a mobile device to pinpoint it’s position within a known indoor space – the two most obvious methods being triangulation-style positioning and hotspot check-in systems.

We opted for the triangulation method – as in theory if it was successful we would be able to apply to the system to any space, and cater for all sorts of navigation methods…. Particularly when used in conjunction with the device compass.

Brands

If you’ve started looking into procuring iBeacons you’ll know there are loads of suppliers to pick from, and it’s not easy to see the difference (in many cases there isn’t much). After assessing a range of brands including BlueSense, Glimworm and the beautifully presented Esitmote, we opted for Kontakt… primarily as they have easily replaceable batteries, easily configurable, are the right price, supply in volume (we needed a lot), and are visually discrete. Here’s a list of them:

Placement and security

The triangulation method requires a large number of iBeacons throughout the museum building in precise locations – effectively creating a 3D grid of signals. These need to be out of reach and ideally invisible to both the public and staff, as otherwise they might be accidentally moved, tampered-with or even taken… any of which will cause serious navigation bugs in our software. This meant that colourful and attractive iBeacons such as Estimote were out of the picture for this project.

Software choices

We decided to implement the navigation system in Unity 3D. Although it’s primarily a game engine, it is where our core mobile experience lies, it satisfies the cross-platform requirements of real world implementations, it is popular and has super-low barrier to entry with developers, and has very little reliance on proprietary tech.

Triangulation method in Unity

So… how best to implement triangulation in Unity? We take the perceived distance from all ‘visible’ iBeacons, and from that we work out the precise position of the device. After a few sprints of getting neck deep in advanced mathematics, we opted to use Unity’s built-in physics engine to do the heavy lifting for us… using Spring Joints from each iBeacon to automagically position the device on a virtual map, based on perceived distances from each iBeacon in range, allowing Unity to perform the complex maths for us.

So… how best to implement triangulation in Unity? We take the perceived distance from all ‘visible’ iBeacons, and from that we work out the precise position of the device. After a few sprints of getting neck deep in advanced mathematics, we opted to use Unity’s built-in physics engine to do the heavy lifting for us… using Spring Joints from each iBeacon to automagically position the device on a virtual map, based on perceived distances from each iBeacon in range, allowing Unity to perform the complex maths for us.

Below is a vid of an early test in the Aardman atrium – displaying the device’s perceived position and direction within a virtual 3D model of the building, as the user walks around. The bright-coloured areas on the mobile display are the two doorways. We’re not embarrassed to say that when we got this working it blew our minds a little bit.

Below is a vid of an early test in the Aardman atrium – displaying the device’s perceived position and direction within a virtual 3D model of the building, as the user walks around. The bright-coloured areas on the mobile display are the two doorways. We’re not embarrassed to say that when we got this working it blew our minds a little bit.

Reliability

For a triangulation system to work effortlessly the distance data it’s based on needs two things: to be accurate, and to be updated frequently.

IBeacon distance readings tend to be fairly inaccurate – with meaningful variance even in the best conditions (up to 3 metres out), and much worse in bad conditions (physical interference such as pillars or people, and electrical interference such as laptops or mobile devices). Accuracy does tend to increase the closer the iBeacons are to the device.

Frequency is also an issue. Users move around a museum space surprisingly fast… and with our system only able to read signals once a second or so it requires a lot of smoothing on the positioning data to avoid flip-outs every time an update occurs.

The compass

The compass is a tricky little system to wrangle. It is 100% reliant on the accuracy of the device’s hardware and software… which isn’t great when it comes to smart phones and tablets. Even in the best conditions digital compasses are likely to be anywhere up to 20% inaccurate (see http://www.techhive.com/article/2055380/six-iphones-tested-and-they-cant-agree-on-true-north.html) – and in bad conditions (such as an indoor space with lots of electrical interference and organic, metal or stone structures everywhere) we’ve witnessed the reading to be out by up to 90 degrees… really not ideal for leading users around a space accurately.

Three-dimensional placement

We knew that iBeacons work on distance, and so therefore the height at which we placed them would make a difference. But – perhaps naively -we didn’t expect this to cause much of an issue, so long as it was consistent. We didn’t take into consideration how powerfully the signals could penetrate through floors and ceilings… and certainly didn’t foresee issues caused by open atriums and balconies.

We knew that iBeacons work on distance, and so therefore the height at which we placed them would make a difference. But – perhaps naively -we didn’t expect this to cause much of an issue, so long as it was consistent. We didn’t take into consideration how powerfully the signals could penetrate through floors and ceilings… and certainly didn’t foresee issues caused by open atriums and balconies.

The Bristol museum and Art Gallery is a complicated building, with vast rooms (some without ceilings), small rooms, corridors, stairwells, about different 6 levels over three defined floors, and even galleries overlooking other rooms as balconies.

In such a space not only is it difficult to find a consistent position in which to place the iBeacons – there are many opportunities for the device to get a bit confused about what floor it’s on…. particularly when it’s picking up signals from the floors above and below, which happen to be stronger than the closest signals from the room it’s physically in.

With a standard GPS system this would be like expecting it to tell you not just what side of the multi-storey carpark you’re in, but which level of it you’re on. And while iBeacon triangulation is vastly easier in simple environments that can be mapped in two dimensions, it is still possible in three – and we actually did it in the end.

Handling shortcomings

So… there are a number of technical issues covered in this post, and each of them has led us to simplify and adapt the experience – even though the underlying tech is largely the same. We quickly learned to accept the huge variance in the quality, accuracy and timeliness of the data our navigation is based on, and soften the blow as much as possible so that the user’s experience isn’t affected:

- The inaccuracies and signal latency of iBeacons led us to free our user experience from relying on pin-point positioning – and rather round up to the user’s position to just the room they’re definitely in.

- The compass inaccuracies lead us to not rely on the compass to lead user around footstep by footstep – but rather to just occasionally find their bearings when stationary.

- The issues caused by three dimensional inaccuracies lead us to create navigation logic that only recognises movement between adjacent rooms…. So if that if the triangulation data suddenly starts suggesting the device has changed floor, it only recognises it if the user has just left an appropriate stairwell or lift area.

What’s brilliant about these solutions are how they each have significant emergent benefits to the overall user experience. Our users are not staring at the phone constantly, causing them to trip over bags and other visitors, and they’re using their brains and senses and communication to guide themselves.

All of these user experience developments and considerations will be covered in a separate post on this blog.

Summary

While iBeacons may not provide the perfect navigation system, they really aren’t a bad approach for both indoor and outdoor navigation – particularly if you go in with your eyes open to the potential issues. We achieved a slick and functional product, learning loads as we went… and hopefully this post has highlighted the issues to watch out for in your own iBeacon implementations. Thanks for reading!

This blog post acts as ‘milestone 3’ evidence Doc 3.1, 3.2 3.3, 3.4 & 3.5 (video test gif)

We also worked a bit on improving how our opening times are displayed. We added the option to add ‘notes’ to particular days, which is mainly for

We also worked a bit on improving how our opening times are displayed. We added the option to add ‘notes’ to particular days, which is mainly for

So… how best to implement triangulation in Unity? We take the perceived distance from all ‘visible’ iBeacons, and from that we work out the precise position of the device. After a few sprints of getting neck deep in advanced mathematics, we opted to use Unity’s built-in physics engine to do the heavy lifting for us… using Spring Joints from each iBeacon to automagically position the device on a virtual map, based on perceived distances from each iBeacon in range, allowing Unity to perform the complex maths for us.

So… how best to implement triangulation in Unity? We take the perceived distance from all ‘visible’ iBeacons, and from that we work out the precise position of the device. After a few sprints of getting neck deep in advanced mathematics, we opted to use Unity’s built-in physics engine to do the heavy lifting for us… using Spring Joints from each iBeacon to automagically position the device on a virtual map, based on perceived distances from each iBeacon in range, allowing Unity to perform the complex maths for us.

We knew that iBeacons work on distance, and so therefore the height at which we placed them would make a difference. But – perhaps naively -we didn’t expect this to cause much of an issue, so long as it was consistent. We didn’t take into consideration how powerfully the signals could penetrate through floors and ceilings… and certainly didn’t foresee issues caused by open atriums and balconies.

We knew that iBeacons work on distance, and so therefore the height at which we placed them would make a difference. But – perhaps naively -we didn’t expect this to cause much of an issue, so long as it was consistent. We didn’t take into consideration how powerfully the signals could penetrate through floors and ceilings… and certainly didn’t foresee issues caused by open atriums and balconies.