So quite a few things have happened since my last blog post here… Notably on our end, the Mshed Bus Interactive!

Over the covid period, using part of our grant from the Arts Council’s Culture Recovery Fund, we decided to turn our iconic Lodekka Bus into an interactive installation aimed at sending kids round the museum to find objects in our collection.

The goal was to create a new way of revitalising Mshed with covid safe interactivity, specifically using the Lodekka Bus. The bus is a significant part of our collection and was accessible on both floors before covid. However, due to the pandemic it has been closed for nearly 2 years as of typing.

How it works

We wanted to add to the bus and give it some life back in these times, and to do so in a way that, if it is opened again, would not restrict access but add to the experience. A project was therefore commissioned to project interactive characters in the windows. These characters (specifically those in the bottom 3 windows of the bus’s left side) can be waved at, and will respond with a story about an object in our collection.

The interactive, as shown below, projects onto 9 of the windows on the entrance side of the bus, with a conductor character on the TV next to the Lodekka signposting people to the interactive. Each of the 3 interactive windows has a hand icon that fills up to show how close the window is to being activated by waving.

How it works (The Nerd Version)

The system uses 3 Azure Kinects, each hooked up to its own computer equipped with an 8 core i7 processor and an RTX graphics card. The 3 PCs drive 4 projectors (one machine handling two projectors), and each machine’s Azure Kinect covers one of the 3 interactive windows on the bottom floor of the bus. All the PCs run the same Touchdesigner project and talk to each other to coordinate what the characters are doing in the bus windows depending on which sensor is triggered.
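To give a rough idea of what "talking to each other" could look like, here is a minimal Python sketch in which one machine tells the others that its window has been triggered. This is not the project's actual code: the post does not say which transport or message format is used, so the JSON-over-UDP approach, the function names and the message fields are all assumptions made for illustration.

```python
import json
import socket


def encode_trigger(window_id, clip):
    """Pack a 'window was triggered' message as UTF-8 JSON (hypothetical format)."""
    message = {"type": "trigger", "window": window_id, "clip": clip}
    return json.dumps(message).encode("utf-8")


def decode_trigger(payload):
    """Return (window_id, clip) for a trigger message, or None for anything else."""
    message = json.loads(payload.decode("utf-8"))
    if message.get("type") != "trigger":
        return None
    return message["window"], message["clip"]


def broadcast_trigger(peers, window_id, clip):
    """Send the trigger to every peer machine, given as (host, port) pairs.

    UDP is an assumption here; it is simply a common choice for this kind
    of lightweight show-control message.
    """
    data = encode_trigger(window_id, clip)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for host, port in peers:
            sock.sendto(data, (host, port))
```

Each receiving machine would decode the message and decide what its own characters should do, for example having the neighbouring characters react while the triggered one tells its story.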

The characters are premade animations, with each video circling back to the same start and end frame so that videos can change over seamlessly. Each projector covers 2 windows, so there are 2 characters per projector. The bus windows are covered in Contravision, which lets you see the projected image whilst still being able to see into the bus from outside and out of the bus from inside.
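The shared start and end frame is what makes a clean changeover possible: as long as a new video only starts when the current one reaches its end, there is no visible jump. A minimal Python sketch of that idea follows. The class, its method names and the "play the story once, then return to the idle loop" behaviour are assumptions for illustration, not a description of the real Touchdesigner project.

```python
class WindowPlayer:
    """Plays one looping clip per window and swaps clips only at a loop boundary."""

    def __init__(self, idle_clip, idle_frames):
        self.idle = (idle_clip, idle_frames)
        self.current, self.length = self.idle
        self.frame = 0
        self.pending = None  # (clip name, frame count) waiting for the next boundary

    def request(self, clip, frames):
        """Queue a clip; it will start when the current clip reaches its end."""
        self.pending = (clip, frames)

    def tick(self):
        """Advance one frame and return the name of the clip shown on that frame."""
        shown = self.current
        self.frame += 1
        if self.frame >= self.length:
            # Loop boundary: every clip shares this frame, so swapping here is seamless.
            self.frame = 0
            if self.pending is not None:
                self.current, self.length = self.pending
                self.pending = None
            else:
                # Assumed behaviour: a story plays once, then the idle loop resumes.
                self.current, self.length = self.idle
        return shown
```

For example, with a 3-frame idle loop, a 2-frame story requested partway through the loop only starts once the idle loop has finished its current pass.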

Touchdesigner also allows us to projection map the videos to the windows, so they line up properly in situ. The wave detection can tell when a hand is both raised and moving, and a threshold is set for the amount of that motion. Visual feedback is given by a hand icon, which shows how close the motion is to the threshold. Once the threshold is passed, the sensor has detected a wave and the video content changes: the character then tells you about an object in the museum. Because the system works by changing videos over, the characters can be swapped for new ones whenever we have them created.
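For the curious, the raised-and-moving logic can be sketched in a few lines of Python. This is only an illustration of the general idea, not the detection code running on the bus: the class name, the use of the shoulder as the "raised" reference, the decay behaviour and the default numbers are all assumptions, and the joint positions are taken to come from the sensor's skeletal tracking.

```python
class WaveDetector:
    """Accumulates sideways hand motion while the hand is raised."""

    def __init__(self, threshold=1.0, decay=0.5):
        self.threshold = threshold  # hypothetical: total sideways travel needed (metres)
        self.decay = decay          # hypothetical: level lost per second while not raised
        self.level = 0.0
        self._last_x = None

    def update(self, hand_x, hand_y, shoulder_y, dt):
        """Feed one skeleton frame; return True on the frame a wave is detected.

        Assumes y increases upwards. Flip the comparison if your sensor's
        y axis points down.
        """
        raised = hand_y > shoulder_y
        if raised:
            if self._last_x is not None:
                self.level += abs(hand_x - self._last_x)
            self._last_x = hand_x
        else:
            # Hand lowered: let the level drain so stray movement does not add up.
            self.level = max(0.0, self.level - self.decay * dt)
            self._last_x = None
        if self.level >= self.threshold:
            self.level = 0.0
            self._last_x = None
            return True
        return False

    @property
    def fill(self):
        """Fraction from 0.0 to 1.0, suitable for driving a hand icon that fills up."""
        return min(1.0, self.level / self.threshold)
```

Calling `update` once per tracked frame and drawing the icon from `fill` gives the kind of feedback described above. Raising the threshold makes the detector more robust against accidental triggers, while lowering it makes it more sensitive.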

Research/Procurement

I was given the task of researching the technical side as a whole to make sure this would work: most notably, getting the system to recognise waving as a trigger for content, making this work with the hardware available, and finding a developer who could pull it off.

This was a leviathan of a project to pull off in the timeframe, and we made use of some fantastic developments in interactive technology to achieve it, most notably Azure Kinect sensors and Touchdesigner. Touchdesigner is a real time visual development platform: you build interactive installations through visual programming with less code, which allows for quicker development.

It’s a software package I’ve been interested in for a while, as it allows you to mock up interactives using sensors at a much quicker pace than coding them: most of the code you would otherwise need to join up different devices and multimedia is built into the software. It’s also not restrictive, in that you can still use Python within Touchdesigner to add functionality where there is no native way of achieving what you want.

The timeframe to get this project on the go was set at the peak of covid and was restrictive for numerous reasons, notably electronic supply chains suffering, no ability to test sensors on more than one person, and restricted access to site, which affected testing and brainstorming of the project concept and logistics.

In particular, this made it hard to research whether a sensor robust enough to detect a wave was readily available to us, along with a developer who could work with it and hardware powerful enough to run the detection software. After getting hold of sensors, we decided that the most robust option was to use Azure Kinects, which have solid skeletal tracking, and that is what we use to detect waving.

Because this project was so niche, finding a developer able to pull it off was difficult. Freelancers were definitely the option, as few companies are willing to take this on without doing the entire project (content as well as hardware), let alone without charging an astronomical fee (10s if not 100s of thousands of pounds). Getting all this to fit together and work was probably the hardest turnaround I’ve done here thus far.

We also had issues procuring computers with graphics cards powerful enough to run the Azure Kinect sensors (a key reminder that order by request does not guarantee you that product at the end, even after payment). Thankfully we had a computer delivered before install week, months after putting in the order. It all pulled together in the end, and we got a fantastic developer, Louis d’Aboville, who has done numerous projects with Touchdesigner and did a fantastic job on this one.

Development/Installation

Once we had the project green lit and the purchases made, Louis began development of the software, and his use of Touchdesigner has given us a changeable, upgradable and robust system for this complex project. Once the software development was finished, we began installing the hardware in July, when the bulk of the install work was done. Also in July, the content development was given to Kilogramme, who did a stellar job working within the constraints the system placed on the content: in particular, making the clips the right length so that triggering the interactive is quick, keeping continuity throughout by using the same start and end frames, and making the animation look convincing.

Because of how the pandemic was at this time, planning a timeline that would fit in with staff’s other obligations was nigh impossible, so getting the install date nailed down took a while. Remobilisation work to get our sites reopened and fully running had to take precedence, and exhibitions such as Vanguard Street Art and Bristol Photo Festival were also draining our capacity. So I would again like to thank both Louis and Kilogramme for the work they did against an ever changing set of dates for key work to be completed.

And in October 2021 we launched the interactive to the public!

Where do we plan to go from here?

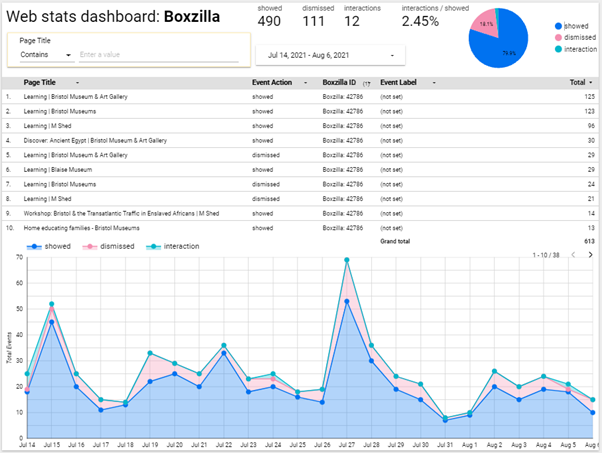

We don’t plan to stop after the initial launch. As the system was designed with flexibility in mind, we want to use the analytics integrated by the developer to work out how to improve it over time. For example, we can optimise the gesture recognition by walking the line between robustness and sensitivity to the various types of human waving. We can also use signage in the interactive area to give visual cues on how best to interact with the system, and add themes to the content in festive periods, such as snow at Christmas, pumpkins at Halloween and eggs at Easter. On top of this, there is still more we could do with the system over time.

I believe this system shows what Touchdesigner is capable of in museums. It can cover most types of interactives that would be made in museums, whilst being a piece of development software that I think most technicians could pick up themselves over time. Its uses go well beyond sensors, cameras and video content: it can manipulate content, projection map and render real time 3D content, and all of these elements can be linked to each other in one project, in real time. A good video showing the use of this in museums can be seen here.

I have been learning the software myself and have been able to pull off some basic interactivity using the Azure Kinect and other sensors. In time I aim to build on this knowledge and apply it in the museum where and when possible, to pioneer new interactivity on our sites.

A Special Thanks to Bristol Museums Development Trust and Arts Council England for making this possible.